The Importance of Robots.txt in SEO

One of the more important aspects of on-site SEO is maintaining the Robots.txt file. Webmasters use this file to tell crawlers which pages they may crawl and which to leave alone. It acts as a guide for web spiders and crawlers.

Hence, every digital marketing agency in India manages the Robots.txt file carefully and keeps it up to date.

What is the Robots.txt file?

Every major search engine (Google, Yahoo!, Bing) respects the rules in the Robots.txt file. Webmasters maintain this file mainly to keep crawlers from overloading the site with requests. Google warns that it is not the right way to keep pages out of the index, because a blocked page can still be indexed if other sites link to it. For that, you should use the noindex meta directive. If the Robots.txt file is not used properly, it can hurt your SEO and your website's ranking.

How does the Robots.txt file work?

The Robots.txt file helps crawlers know which pages they should crawl and which they should avoid. By using this technique, you can keep crawlers away from duplicate content. For example, if you have two versions of a page, one for mobile and one for desktop, you can list one of them in the Robots.txt file so that it is not crawled twice. You can also block the “thank you” pages, which are shown only after visitors have submitted some information on your site. You might also want to keep crawlers away from private files.
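To see how a crawler reads these rules, here is a minimal sketch using Python's standard `urllib.robotparser` module. The paths (`/m/`, `/thank-you`, `/private/`) and the `www.example.com` domain are made up for illustration; your own site will use different ones.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block mobile duplicates, a thank-you page and a private folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /m/
Disallow: /thank-you
Disallow: /private/
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given crawler may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/", "/m/products", "/thank-you", "/private/report.pdf"):
        url = "https://www.example.com" + path
        print(path, "allowed" if is_allowed(ROBOTS_TXT, url) else "blocked")
```

A well-behaved crawler performs roughly this check before each request. Real search engines may interpret some rules slightly differently from Python's parser, so treat this as an approximation.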

What are the best SEO practices for Robots.txt?

- Create a Robots.txt file

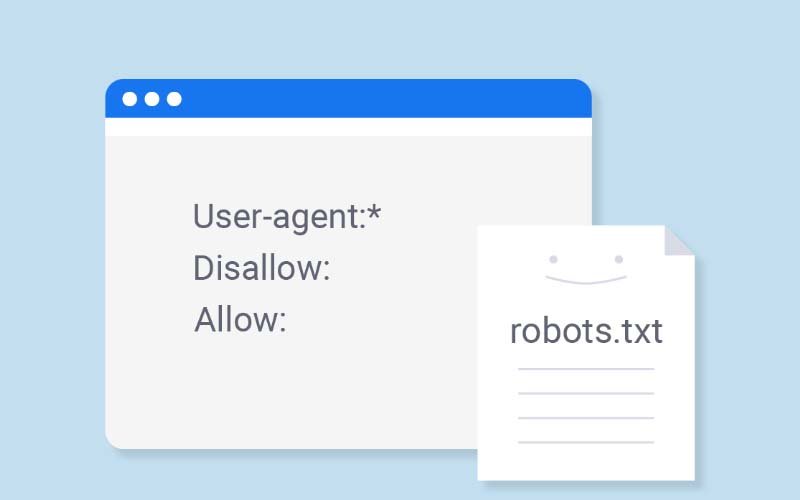

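Creating a Robots.txt file is very simple and can be done in Notepad or any other plain-text editor. Each rule follows this pattern:

User-agent: X (X is the name of the crawl bot the rule applies to; * means every bot.)

Disallow: Y (Y is the path you want blocked; add one Disallow line for each path.)

For example, to block every crawler from the /images directory: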

User-agent: *

Disallow: /images

This makes it easier for crawlers to work through the website and skip the things you do not want crawled.
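If you would rather generate the file than type it by hand, the pattern above can be sketched in a few lines of Python. The function name `build_robots_txt` and the rule dictionary are assumptions made for this example, not part of any standard tool.

```python
def build_robots_txt(rules: dict[str, list[str]]) -> str:
    """Render {user_agent: [blocked paths]} as robots.txt text."""
    groups = []
    for user_agent, paths in rules.items():
        lines = [f"User-agent: {user_agent}"]
        # An empty Disallow value means nothing is blocked for this crawler.
        lines += [f"Disallow: {path}" for path in paths] or ["Disallow:"]
        groups.append("\n".join(lines))
    # Separate each crawler's group with a blank line.
    return "\n\n".join(groups) + "\n"


if __name__ == "__main__":
    # Reproduces the example from the article: block /images for every crawler.
    print(build_robots_txt({"*": ["/images"]}), end="")
```

You would then save the output as robots.txt and upload it to your site.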

- Make the Robots.txt file easy to find

The Robots.txt file must sit where crawlers look for it. Make the file live and place it in the root (top-level) directory of your site, so that it is reachable at an address such as https://www.example.com/robots.txt. The file name is case sensitive, so it must be named robots.txt, all in lowercase. Every digital marketing agency in India will place the robots.txt file in the root directory of your website.

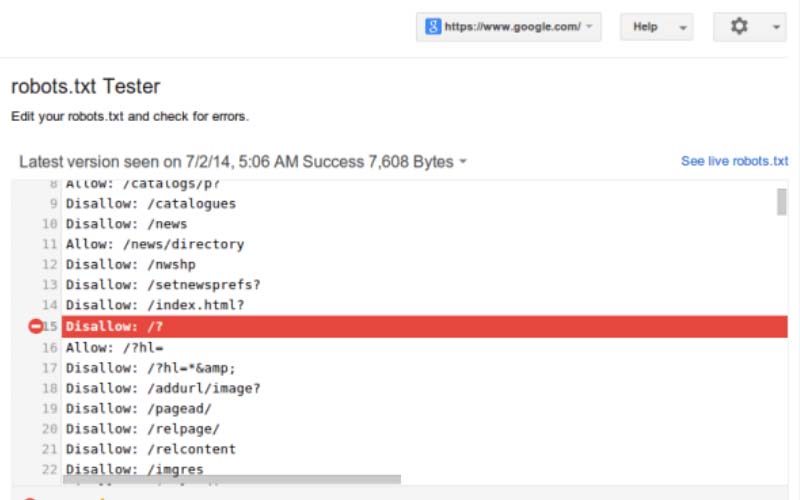
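Crawlers look for the file at the root of the host, whatever page they start from. As a small illustration, the hypothetical helper below (`robots_url` is a name chosen for this example) works out where the file should live for any page address, using only Python's standard library.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the address where crawlers expect robots.txt for this page's site."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, drop the page path, query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_url("https://www.example.com/blog/post?id=1"))
```

If your file does not open at the address this returns for your own domain, crawlers are unlikely to find it.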

- Check for Errors and Mistakes

Another way to keep the Robots.txt file SEO friendly is to check it for errors. A mistake, such as accidentally disallowing the whole site, can stop crawlers from reaching your pages and cost you rankings. You can use Google's robots.txt testing tools in Search Console to make sure the rules are set up correctly. That way, you can keep your Robots.txt file optimised for a better SEO experience.
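Before uploading the file, you can also run a quick check of your own. The sketch below is a deliberately simple linter, not a replacement for Google's tools: the list of known directives and the three checks it performs are assumptions chosen for this example (Crawl-delay, for instance, is not honoured by every search engine).

```python
# Directives this sketch recognises; an assumption, not an official list.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def find_problems(robots_txt: str) -> list[str]:
    """Return human-readable warnings for common robots.txt mistakes."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' separator")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        name = field.lower()
        if name not in KNOWN_DIRECTIVES:
            problems.append(f"line {number}: unknown directive '{field}'")
        elif name == "user-agent":
            seen_user_agent = True
        elif name in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append(f"line {number}: rule appears before any User-agent line")
            if value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path should start with '/'")
    return problems


if __name__ == "__main__":
    for warning in find_problems("User-agent: *\nDisalow: /images\nDisallow: private\n"):
        print(warning)
```

An empty result only means none of these particular mistakes were found; it does not prove the rules do what you intend.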

Takeaway

You may ask this digital marketing agency in India why you need to use Robots.txt when you can use meta directives. The answer is simple. Files such as PDFs and videos are not HTML pages, so they cannot carry a noindex meta tag.

- Maintain and use the Robots.txt file wisely, as it is essential for SEO.

- You can block crawlers from your entire site as well.

- You can allow crawlers into only certain areas of your site.
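The last two points can be illustrated with a short sketch that again relies on Python's standard `urllib.robotparser`. The `/blog/` path and the `www.example.com` domain are hypothetical. The Allow line is placed before the Disallow line because Python's parser applies the first rule that matches.

```python
from urllib.robotparser import RobotFileParser

# Blocks every crawler from the whole site.
BLOCK_ALL = "User-agent: *\nDisallow: /\n"

# Opens only the (hypothetical) /blog/ area and blocks everything else.
BLOG_ONLY = "User-agent: *\nAllow: /blog/\nDisallow: /\n"


def can_crawl(robots_txt: str, path: str) -> bool:
    """Return True if any crawler may fetch the path under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("*", "https://www.example.com" + path)


if __name__ == "__main__":
    for name, rules in (("BLOCK_ALL", BLOCK_ALL), ("BLOG_ONLY", BLOG_ONLY)):
        for path in ("/blog/post", "/about"):
            print(name, path, can_crawl(rules, path))
```

Be careful with the first form: a single `Disallow: /` left in place by mistake shuts crawlers out of the whole site.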

Having said all this, we hope you understand how essential the Robots.txt file is for a well-optimised website.